The ability for computers to recognise novel images – to ‘see’ – is perhaps the most exciting side of AI.

But while most of the hype is centred around computer vision in self-driving cars, there are already plenty of implications for marketers.

Here are 10 of them…

1. Smarter online merchandising

Merchandising in ecommerce is traditionally all about tagging. Each product has numerous tags, which allow the customer to filter for particular attributes, but also allow recommendation algorithms to surface related products (these algorithms may also analyse behavioural and purchase data).

Online retailers can also override these algorithms if they want to surface a particularly important product – perhaps something new.

However, AI-based software such as Sentient Aware is now allowing for visual product discovery, which negates the need for most metadata and surfaces similar products based on their visual affinity. This means that as an alternative to using a standard filtering system, shoppers can select a product they like and be shown visually similar products.

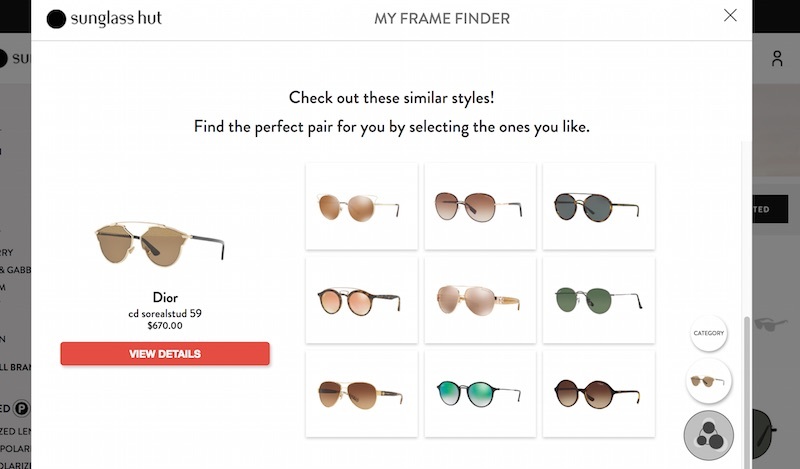

The screenshots below from Sunglass Hut show how this experience might look to the customer.

Sunglass Hut uses Sentient Aware to power its visual product discovery

The advantages of visual product discovery are manifold. It can surface a greater proportion of a product catalogue and find products in separate categories that a customer may not have otherwise encountered (e.g. a ‘sports’ shoe that looks similar to one from the ‘lifestyle’ category).
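To make the idea concrete, here is a minimal sketch of how ‘visual affinity’ could be ranked. It assumes each product image has already been turned into a numeric vector by some vision model, and it compares those vectors with cosine similarity. The catalogue, the vectors and the function names are all invented for illustration, and nothing here is taken from Sentient Aware's actual implementation.

```python
import math


def cosine_similarity(a, b):
    """Cosine of the angle between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def visually_similar(catalogue, product_id, top_n=3):
    """Rank every other product by how close its image vector is."""
    query = catalogue[product_id]["vector"]
    scored = [
        (cosine_similarity(query, item["vector"]), other_id)
        for other_id, item in catalogue.items()
        if other_id != product_id
    ]
    scored.sort(reverse=True)  # highest similarity first
    return [other_id for _, other_id in scored[:top_n]]


# Hypothetical catalogue: these vectors are made up, not model output.
CATALOGUE = {
    "sports-runner": {"category": "sports", "vector": [0.9, 0.1, 0.3]},
    "lifestyle-sneaker": {"category": "lifestyle", "vector": [0.8, 0.2, 0.35]},
    "leather-boot": {"category": "formal", "vector": [0.1, 0.9, 0.2]},
    "sports-sandal": {"category": "sports", "vector": [0.2, 0.3, 0.9]},
}

print(visually_similar(CATALOGUE, "sports-runner"))
```

Note that the ranking ignores the `category` field entirely, which is why a shoe from a different category can come out on top.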

Where once online retailers had to decide between selling a focused/curated selection of products or a larger range, visual product discovery allows the best of both worlds.

2. More effective retargeting

The same technology used for merchandising can also be applied to retargeting.

Retargeting site visitors with display advertising for a single product (perhaps after cart abandonment) is effective but can often be a blunt tactic.

Dynamic creative that features a range of visually similar products may have more success, especially as retailers may be unsure if a customer has already bought a specific product offline.

3. Real-world product & content discovery

Pinterest has only recently launched a tool called Lens, which functions like Shazam but for the visual world. This gives the consumer the ability to point their smartphone camera at an object and perform a Pinterest search, to surface that particular product or related content.

This could be used to find a supplier of a piece of furniture you see, or a recipe for an exotic unknown vegetable.

The social network has had a form of visual search functionality since 2015 (as has Houzz), allowing users to select part of an image and search for related items, but has expanded this further with Lens, as well as allowing brands to surface products found within photographs.

So, if a user is looking at a Pin of an interior which features a particular chair, for example, a retailer could potentially provide a link to purchase the chair.

4. Image-aware social listening

Brands chiefly monitor social media for mention of their products and services. But text forms only a part of what social media users post online – images and video are arguably just as important.

There are already companies (such as Ditto or GumGum) providing social listening that can recognise the use of brand logos, helping community managers find good and bad feedback.

Subtweeters, beware.

5. Frictionless store experiences

Amazon Go hit the headlines in December 2016. Customers enter the store via a turnstile which scans a barcode on their Amazon Go app.

Computer vision technology then tracks the customer around the store (presumably alongside some form of phone tracking, though Amazon has not released full details) and sensors on the shelves detect when the customer selects an item.

Once you’ve got everything you need, you simply leave the store, with the Go app knowing what you have taken with you. Amazon’s concept store in Seattle seems to have paved the way for computer vision to play a role in removing the pain from the checkout experience.

6. Retail analytics

Density is a startup that anonymously tracks people as they move around workspaces, using a small piece of hardware that monitors movement through doorways.

This data has many uses, notably in safety, but also in tracking how busy a store is or how long a queue or wait time is.

Of course, automated footfall counters have been available for a while, but advances in computer vision mean people tracking is sophisticated enough to be used in the optimisation of merchandising.

RetailNext is one company which provides such retail analytics, allowing store owners to ask:

- where do shoppers go in my store (and where do they not go)?

- where do shoppers stop and engage with fixtures or sales associates?

- how long do they stay engaged?

- which are my most effective fixtures, and which ones are underperforming?
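As a rough illustration of how questions like these might be answered once people tracking is in place, the sketch below adds up dwell time per store zone. It assumes the tracking system emits simple (shopper, timestamp, zone) sightings and that a shopper stays in a zone until they are next seen. The data format, zone names and function name are hypothetical and are not based on RetailNext's or Density's products.

```python
from collections import defaultdict


def dwell_seconds_by_zone(sightings):
    """Total the time shoppers spend in each zone.

    `sightings` is a list of (shopper_id, timestamp_seconds, zone) tuples.
    Assumption: a shopper remains in a zone until their next sighting, so
    the final sighting of each shopper contributes no dwell time.
    """
    by_shopper = defaultdict(list)
    for shopper_id, timestamp, zone in sightings:
        by_shopper[shopper_id].append((timestamp, zone))

    totals = defaultdict(float)
    for track in by_shopper.values():
        track.sort()  # order each shopper's sightings by time
        for (start, zone), (end, _next_zone) in zip(track, track[1:]):
            totals[zone] += end - start
    return dict(totals)


# Hypothetical sightings, invented for illustration.
SIGHTINGS = [
    ("a", 0, "entrance"),
    ("a", 10, "sunglasses"),
    ("a", 70, "checkout"),
    ("b", 5, "entrance"),
    ("b", 8, "sunglasses"),
    ("b", 20, "entrance"),
]

print(dwell_seconds_by_zone(SIGHTINGS))
```

Zones with high totals are where shoppers stop and engage; zones that never appear are the ones they skip.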

7. Emotional analytics

In January 2016 MediaCom announced that it would be using facial detection and analytics technology developed by Realeyes as part of content testing and media planning.

The tech uses remote panels of users and works using their existing webcams to capture their reactions to ads and content.

Realeyes CEO Mihkel Jaatma told Martech Today that emotional analytics is “faster and cheaper” than traditional online surveys or focus groups, and gathers direct responses rather than drawing on subjective or inferred opinions.

Other companies in the emotional analytics space include Unruly, in partnership with Nielsen.

8. Image search

As computer vision improves, it can be used to perform automated general tagging of images. This may eventually mean that manual and inconsistent tagging is not needed, making image organisation on a large scale quicker and more accurate.

This has profound implications for querying large sets of images. As Gaurav Oberoi suggests in a blog post, a user could ask, for example, “what kinds of things are shown on movie posters and do they differ by genre?”

Eventually, when applied to video, the data available will be mind-boggling, and how we access and archive imagery may fundamentally change.

Though this is still a long way off, many will already be familiar with the power of image search in Google Photos, which is trained to recognise thousands of objects, and with doing a reverse image search within Google’s search engine or in a stock photo archive.

9. Augmented reality

From Snapchat Lenses to as-yet commercially unproven technology involving headsets such as HoloLens, augmented reality is increasingly mentioned as a possible next step for mobile technology.

Indeed, Tim Cook seems particularly excited about it.

10. Direct mail processing

I had to make my list up to 10, so I thought I’d include a rather more old school but essential use of optical character recognition (OCR).

Royal Mail in the UK spent £150m on an automated facility in 2004, near Heathrow, which scans the front and back of envelopes and translates addresses into machine-readable code.

This technology enables next day delivery at scale.

There are plenty of other prospective uses of computer vision in UX, perhaps most notably including facial recognition and its many applications. Think I’ve missed anything important? Add a comment below.
